Is Gemini a good alternative to OpenAI’s ChatGPT?

4 mins | Dec 11, 2023

Yesterday, the Internet was abuzz with one word: Gemini. What does it actually mean? Many people who hadn't been following the buzz on Twitter were left scratching their heads.

Well, Google has integrated its new AI model, Gemini, into its Bard chatbot, which now runs a fine-tuned version of Gemini Pro. According to Google, Gemini improves Bard's understanding of user intent, resulting in more accurate, higher-quality responses. The model comes in three sizes, each catering to different needs: Nano handles fast on-device tasks, Pro serves as a versatile middle ground, and Ultra is the most capable option. The Ultra version, however, is still undergoing safety checks and is slated for release next year.

Gemini Nano is Google's most efficient model, built for on-device tasks. It currently runs on the Pixel 8 Pro, the first smartphone engineered for Gemini Nano. The phone uses its Google Tensor G3 chip to power two expanded features: Summarize in Recorder and Smart Reply in Gboard.

But what do the two features actually do?

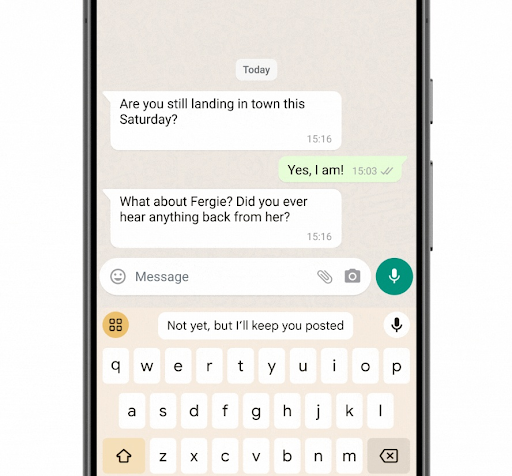

Smart Reply in Gboard suggests what to say next in a messaging app. With Gemini Nano, it generates more relevant and natural-sounding responses than before.

How do you use Smart Reply on a Pixel 8 Pro?

To use Smart Reply, first enable AiCore in your Pixel 8 Pro's Developer Options by navigating to Settings > Developer Options > AiCore Settings > Enable Aicore Persistent. (If Developer Options isn't visible, unlock it by tapping Build number seven times in Settings > About phone.) Then open a WhatsApp conversation.

Once AiCore is enabled, Gemini Nano-powered Smart Reply suggestions appear in the Gboard keyboard's suggestion strip. For now, the feature is available as a limited preview for US English in WhatsApp.

The second feature is Summarize in Recorder. With Gemini Nano, the Recorder app can now generate a summary of a full meeting with a single tap, giving you a quick overview of the main points and highlights. To use it, open the app and start recording. You can then tap the summary button to see the Gemini Nano-generated summary of the audio.

With Gemini under the hood, Google Bard aims to compete with top AI models such as OpenAI's GPT-4 and Microsoft's Copilot.

What features does Gemini have?

Gemini comes in three sizes (Ultra, Pro, and Nano) and has several advanced features that make it an AI model to watch.

The advanced AI model has features like:

Next-generation capabilities

Until now, the standard approach to building multimodal models involved training separate components for different modalities and then stitching them together to roughly mimic native multimodal understanding. Such models can be good at certain tasks, like describing images, but struggle with more conceptual and complex reasoning.

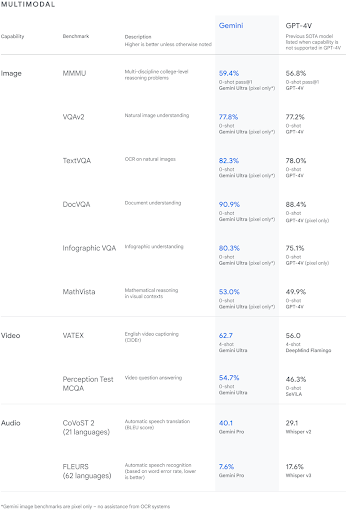

Google designed Gemini to be natively multimodal, pre-training it from the start on different modalities and then fine-tuning it with additional multimodal data to further refine its effectiveness. According to Google, this lets Gemini understand and reason about all kinds of inputs from the ground up far better than existing multimodal models, with state-of-the-art capabilities in nearly every domain.

Sophisticated reasoning

Gemini 1.0’s sophisticated multimodal reasoning capabilities can help make sense of complex written and visual information. This makes it uniquely skilled at uncovering knowledge that can be difficult to discern amid vast amounts of data.

Google says Gemini's ability to extract insights from hundreds of thousands of documents, by reading, filtering, and understanding information, could help deliver new breakthroughs at digital speed in fields ranging from science to finance.

Understanding text, images, audio and more

Gemini 1.0 was trained to recognize and understand text, images, audio, and more at the same time, so it better understands nuanced information and can answer questions on complicated topics. This makes it especially good at explaining reasoning in complex subjects like math and physics.

Advanced coding

The first version of Gemini can understand, explain, and generate high-quality code in the world’s most popular programming languages, like Python, Java, C++, and Go. Its ability to work across languages and reason about complex information makes it one of the leading foundation models for coding in the world.

Gemini Ultra excels in several coding benchmarks, including HumanEval, an important industry standard for evaluating performance on coding tasks, and Natural2Code, an internally held-out dataset that uses author-generated sources instead of web-based information.

Gemini can also be used as the engine for more advanced coding systems. Two years ago, Google presented AlphaCode, the first AI code generation system to reach a competitive level of performance in programming competitions.

Using a specialized version of Gemini, Google created a more advanced code generation system, AlphaCode 2, which excels at solving competitive programming problems that go beyond coding to involve complex math and theoretical computer science.

Is Gemini better than ChatGPT and Copilot?

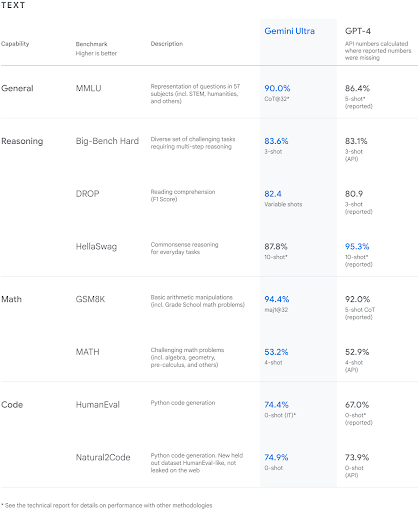

According to Google's blog, Gemini Ultra outperformed the previous state of the art on 30 of 32 widely used academic benchmarks, with OpenAI's GPT-4 as the main point of comparison. These benchmarks span domains including reasoning, math, reading comprehension, and Python code generation.

Google's published figures also compare Gemini Pro, the version now powering Bard, with GPT-3.5, the model behind the free version of ChatGPT. On MMLU (Massive Multitask Language Understanding), Gemini Pro scored 79.13% versus GPT-3.5's 70%. On GSM8K, which tests arithmetic reasoning, Gemini Pro scored 86.5%, well ahead of GPT-3.5's 57.1%. On HumanEval, which tests code generation, Gemini Pro scored 67.7% to GPT-3.5's 48.1%. Of these four benchmarks, the only one where GPT-3.5 fared better was MATH, where Gemini Pro scored 32.6% compared to GPT-3.5's 34.1%.

Google's technical report also compares Gemini Ultra with GPT-4. On Massive Multitask Language Understanding (MMLU), which covers 57 subjects including STEM and the humanities, Gemini Ultra scored 90% to GPT-4's reported 86.4%.

In reasoning, Gemini Ultra scored 83.6% on Big-Bench Hard, a set of diverse, multi-step reasoning tasks, compared to GPT-4's 83.1%. On the DROP reading comprehension benchmark, Gemini Ultra achieved an F1 score of 82.4, while GPT-4 scored 80.9 in a 3-shot setting.

In math, Gemini Ultra scored 94.4% on GSM8K, which tests basic arithmetic manipulations, while GPT-4 scored 92.0%.
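To make the quoted gaps easier to compare, here is a small Python sketch that tabulates the scores cited in this article and computes Gemini's margin on each benchmark. The numbers are copied from the article, not re-measured, and the names `Result`, `margins`, `PRO_VS_GPT35` and `ULTRA_VS_GPT4` are hypothetical, chosen only for illustration.

```python
from typing import List, NamedTuple, Tuple


class Result(NamedTuple):
    """One benchmark comparison as quoted in the article (hypothetical helper type)."""
    benchmark: str
    gemini: float  # Gemini's score
    rival: float   # competing model's score


# Figures copied from the article; DROP is an F1 score, the rest are percentages.
PRO_VS_GPT35 = [
    Result("MMLU", 79.13, 70.0),
    Result("GSM8K", 86.5, 57.1),
    Result("HumanEval", 67.7, 48.1),
    Result("MATH", 32.6, 34.1),
]

ULTRA_VS_GPT4 = [
    Result("MMLU", 90.0, 86.4),
    Result("Big-Bench Hard", 83.6, 83.1),
    Result("DROP (F1)", 82.4, 80.9),
    Result("GSM8K", 94.4, 92.0),
]


def margins(results: List[Result]) -> List[Tuple[str, float]]:
    """Return (benchmark, Gemini minus rival) pairs, rounded to one decimal place."""
    return [(r.benchmark, round(r.gemini - r.rival, 1)) for r in results]


if __name__ == "__main__":
    for title, rows in (
        ("Gemini Pro vs GPT-3.5", PRO_VS_GPT35),
        ("Gemini Ultra vs GPT-4", ULTRA_VS_GPT4),
    ):
        print(title)
        for name, diff in margins(rows):
            # Positive means Gemini scored higher; negative means the rival did.
            print(f"  {name:<15} {diff:+.1f}")
```

Laid out this way, Gemini Pro's lead over GPT-3.5 ranges from about 29 points on GSM8K to a 1.5-point deficit on MATH, while Gemini Ultra's leads over GPT-4 are all under 4 points. Treat these as rough margins: the evaluation setups were not always identical (as noted above, GPT-4's DROP score was 3-shot).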

To showcase Bard's new Gemini-powered capabilities, Google teamed up with popular American science YouTuber Mark Rober.

In the video, Mark Rober uses Google Bard to help him design a paper airplane, blending science and engineering to push the limits of its aerodynamics.


Final Thoughts

Overall, Gemini integrated into Google Bard promises to be the next big thing in the AI space. Will it be able to disrupt the industry the way OpenAI did? Only time will tell.
